Jun 27

Picture this: you've just launched a groundbreaking AI application powered by cutting-edge APIs. Your team has poured countless hours into development, and the innovation potential is limitless. But beneath the sleek interface and impressive functionality, a threat is lurking. Your AI API, the foundation of your application, is a ticking time bomb waiting to be exploited by cybercriminals. As a seasoned cybersecurity professional who has worked with numerous clients implementing AI solutions, I've seen firsthand the devastating consequences of neglecting API security.

One of the most significant risks associated with AI APIs is the potential for data breaches. AI systems rely on vast amounts of sensitive data to train their models and make predictions. If an API is not secured correctly, it can become a gateway for attackers to access this valuable data. The consequences of a data breach can be catastrophic, leading to financial losses, reputational damage, and legal liabilities.

Take, for example, a recent incident where a client's AI-powered chatbot was hijacked by attackers who used it to spread misinformation and phishing links. The attackers had discovered a vulnerability in the API's authentication system, allowing them to bypass security measures and take control of the chatbot. It was a stark reminder of how quickly an AI API can turn from an asset to a liability.

Another client, a healthcare provider, learned the hard way about the importance of data security in AI systems. They used an AI API to analyze patient data and improve treatment outcomes. However, a breach in the API exposed sensitive patient information, leading to a costly legal battle and a loss of trust among patients.

These incidents are not isolated cases. According to IBM's Cost of a Data Breach Report (2020 edition), the average cost of a data breach in the healthcare industry was $7.13 million, the highest of any sector; in the financial industry, the average cost was $5.85 million. These staggering figures underscore the need for organizations to prioritize API security in their AI implementations.

However, data breaches are just the tip of the iceberg when it comes to AI API risks. Another significant threat is data poisoning: by injecting carefully crafted data into the data an AI system learns from, attackers can alter its behavior and cause it to make incorrect or harmful decisions.

A closely related threat, the adversarial example, was demonstrated in a well-known research study in which a road-sign classifier of the kind used in self-driving cars was tricked into misidentifying stop signs as speed limit signs. The researchers used adversarial machine learning techniques to design small stickers that looked like ordinary graffiti to a human observer but caused the model to misclassify the signs. Unlike poisoning, this attack manipulates the inputs a trained model sees rather than the data it learns from. In a real-world scenario, it could have deadly consequences.
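To make the idea of a small, targeted modification concrete, here is a toy sketch using the fast gradient sign method (FGSM), a simpler textbook attack swapped in for illustration; it is not the sticker-based technique used in the stop-sign study. The model is a hypothetical four-feature linear classifier with made-up weights, not a real vision system.

```python
# Toy adversarial perturbation against a hypothetical linear classifier.
# Weights, bias, and input are made-up numbers, not a real traffic-sign model.

def score(weights, bias, x):
    """Linear score: positive means 'stop sign', negative means 'speed limit'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, x, epsilon):
    """One fast-gradient-sign step that pushes the score down.

    For a linear score w.x + b, the gradient with respect to x is w, so
    shifting each feature by -epsilon * sign(w_i) lowers the score by
    epsilon * sum(|w_i|) while no feature moves by more than epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.9, -0.4, 0.7, 0.2]
bias = -0.1
x = [0.3, 0.5, 0.2, 0.4]

x_adv = fgsm_perturb(weights, x, epsilon=0.15)
print(round(score(weights, bias, x), 3), round(score(weights, bias, x_adv), 3))
```

The clean input scores positive and the perturbed one scores negative, even though every feature changed by at most 0.15. Real attacks on image models exploit the same idea across thousands of pixels at once.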

The complexity of AI systems also makes it difficult to audit and monitor them for security vulnerabilities. As AI models become more sophisticated, it becomes increasingly challenging to understand how they arrive at their decisions. This lack of transparency can make it harder to detect and mitigate security risks.

In one real-world example, a financial institution discovered that its AI-powered fraud detection system had been quietly manipulated over several months, allowing millions of dollars in fraudulent transactions to slip through undetected. The attackers had exploited a weakness in the AI model's training data, causing it to misclassify certain transactions as legitimate.
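One inexpensive safeguard against this kind of training-data manipulation is to compare each new training batch against a trusted baseline before retraining. The sketch below checks only label proportions; the function names, the `max_shift` threshold, and the "legit"/"fraud" labels are illustrative assumptions, and a careful attacker who keeps the proportions stable would slip past it.

```python
from collections import Counter

def label_shares(labels):
    """Fraction of examples carrying each label."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}

def flag_label_shift(baseline_labels, new_labels, max_shift=0.02):
    """Return labels whose share moved by more than max_shift (absolute).

    A sudden rise in 'legit' labels in a fraud dataset is one cheap signal
    that a retraining batch deserves a closer look before it is used.
    """
    base = label_shares(baseline_labels)
    new = label_shares(new_labels)
    flagged = {}
    for label in sorted(set(base) | set(new)):
        delta = new.get(label, 0.0) - base.get(label, 0.0)
        if abs(delta) > max_shift:
            flagged[label] = round(delta, 3)
    return flagged

baseline = ["legit"] * 95 + ["fraud"] * 5   # trusted historical batch
incoming = ["legit"] * 99 + ["fraud"] * 1   # suspicious new batch
print(flag_label_shift(baseline, incoming))
```

A flagged batch would be held for human review rather than fed straight into retraining.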

So, what can organizations do to mitigate these risks? The first step is recognizing that AI API security requires a different approach than traditional cybersecurity. AI systems are complex, dynamic, and often opaque, requiring specialized tools and expertise to secure effectively.

One key strategy is implementing strong authentication and access controls around AI APIs. This includes using multi-factor authentication and granular access controls based on the principle of least privilege. By ensuring that only authorized users and systems can access AI APIs, organizations reduce the risk of unauthorized access and data breaches.
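As a minimal sketch of the access-control half of this advice, the check below verifies an API key and then enforces least privilege by requiring a specific scope for each operation. The key registry, client names, and scope names are hypothetical; multi-factor authentication would normally be handled upstream by an identity provider and is not shown.

```python
import hashlib
import hmac

def _hash_key(raw_key: str) -> str:
    """Store and compare only hashes, so a leaked registry exposes no usable keys."""
    return hashlib.sha256(raw_key.encode("utf-8")).hexdigest()

# Hypothetical registry: key hash -> client name and the scopes it was granted.
API_KEYS = {
    _hash_key("analytics-key-123"): {"client": "analytics", "scopes": {"predict"}},
    _hash_key("admin-key-456"): {"client": "ml-admin", "scopes": {"predict", "retrain"}},
}

def authorize(raw_key: str, required_scope: str) -> bool:
    """Allow the call only if the key is known AND grants the required scope."""
    presented = _hash_key(raw_key)
    for stored_hash, entry in API_KEYS.items():
        # compare_digest avoids leaking information through timing differences
        if hmac.compare_digest(presented, stored_hash):
            return required_scope in entry["scopes"]
    return False

print(authorize("analytics-key-123", "predict"), authorize("analytics-key-123", "retrain"))
```

The analytics client can request predictions but cannot trigger retraining, which is least privilege applied at the level of individual API operations.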

Data encryption is another critical component of AI API security. Sensitive data should be encrypted both in transit and at rest using industry-standard algorithms. This helps protect data from interception and unauthorized access, even if a breach occurs.
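For data in transit, a client calling an AI API can insist on verified, modern TLS. This sketch uses only Python's standard `ssl` module; the TLS 1.2 floor is an assumed policy choice, and encryption at rest (typically AES handled by the database, storage layer, or a key-management service) is outside its scope.

```python
import ssl

def make_client_tls_context() -> ssl.SSLContext:
    """TLS settings for a client that calls an AI API over the network."""
    # The default context verifies server certificates and checks hostnames.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (assumed policy).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

ctx = make_client_tls_context()
print(ctx.minimum_version, ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

The resulting context can be passed to standard clients such as `urllib.request.urlopen(url, context=ctx)` or `http.client.HTTPSConnection(host, context=ctx)`.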

Regular security audits and penetration testing are also essential for identifying and addressing vulnerabilities in AI systems. These assessments should be conducted by experienced cybersecurity professionals who understand the unique risks associated with AI APIs. Organizations can stay one step ahead of potential attackers by proactively identifying and remediating vulnerabilities.

Investing in AI-specific security tools and expertise is another key strategy. Traditional security tools and approaches may not be enough on their own to secure AI systems, which require specialized monitoring and anomaly detection capabilities. Machine-learning-based security tools can help organizations detect and respond to threats in real time, while explainable AI techniques can provide greater transparency into how AI models make decisions.
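Anomaly detection does not have to start with machine learning. As a deliberately simple stand-in for the ML-based tools described above, this sketch flags API clients whose request volume in the latest interval sits far above their own history, using a z-score. The data layout, the 3.0 threshold, and the floor on the standard deviation are assumptions chosen for illustration.

```python
import statistics

def flag_anomalous_clients(history, current, z_threshold=3.0, min_stdev=1.0):
    """Return clients whose latest request count is far above their baseline.

    history: client -> list of past per-interval request counts
    current: client -> request count in the latest interval
    """
    flagged = []
    for client in sorted(current):
        past = history.get(client, [])
        if len(past) < 2:
            continue  # too little history to estimate a baseline
        mean = statistics.mean(past)
        # Floor the spread so perfectly steady clients don't cause division by zero
        stdev = max(statistics.stdev(past), min_stdev)
        z_score = (current[client] - mean) / stdev
        if z_score > z_threshold:
            flagged.append(client)
    return flagged

history = {
    "analytics": [100, 110, 95, 105, 90],
    "billing": [20, 22, 19, 21, 18],
}
current = {"analytics": 108, "billing": 240, "new-client": 50}
print(flag_anomalous_clients(history, current))
```

Clients with too little history are skipped here; in practice they would need a separate policy, such as a conservative default rate limit.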

The most critical aspect of AI API security is fostering a culture of security awareness and collaboration. This means educating teams about the unique risks associated with AI APIs and encouraging open communication and information sharing. It also means being transparent about AI systems' limitations and potential biases and actively working to mitigate them.

One effective way to promote collaboration and awareness is to establish cross-functional teams that bring together cybersecurity, data science, and software engineering experts. These teams can work together to design and implement secure AI systems, sharing knowledge and best practices.

Another important aspect of AI API security is preparing for the worst-case scenario. Even with the most robust security measures, breaches can still occur. That's why it's essential to have a well-defined incident response plan outlining the steps to take in the event of a security incident involving AI APIs.

This plan should include procedures for containing the breach, assessing the damage, and communicating with stakeholders. It should also include provisions for conducting a thorough post-incident review to identify the root cause of the breach and implement measures to prevent similar incidents in the future.

In conclusion, AI APIs represent both an incredible opportunity and a significant risk for organizations. As AI continues to transform industries and shape our world, we must make API security a top priority. By implementing strong authentication and access controls, encrypting sensitive data, conducting regular security audits, investing in AI-specific security tools and expertise, and fostering a culture of awareness and collaboration, organizations can unlock the full potential of AI while minimizing the risks.

However, the responsibility for AI API security doesn't fall solely on organizations. As individuals, we also have a role to play in safeguarding our data and holding organizations accountable for their AI practices. This means being vigilant about the AI systems we interact with, asking questions about how our data is being used and protected, and advocating for greater transparency and accountability in the development and deployment of AI.

Ultimately, the future of AI will be shaped by our choices today. By prioritizing API security and working together to mitigate the risks, we can create a future where AI is a powerful force for good, driving innovation and improving lives while protecting the data and systems that power it.

So, is your organization treating API security as a top priority in its AI initiatives? Are you prepared for the cybersecurity challenges that come with the rapid adoption of AI? The stakes are high, but with the right approach and a commitment to security, we can navigate the AI cybersecurity minefield and emerge more robust and resilient.

Keywords: AI, DevOps, Security

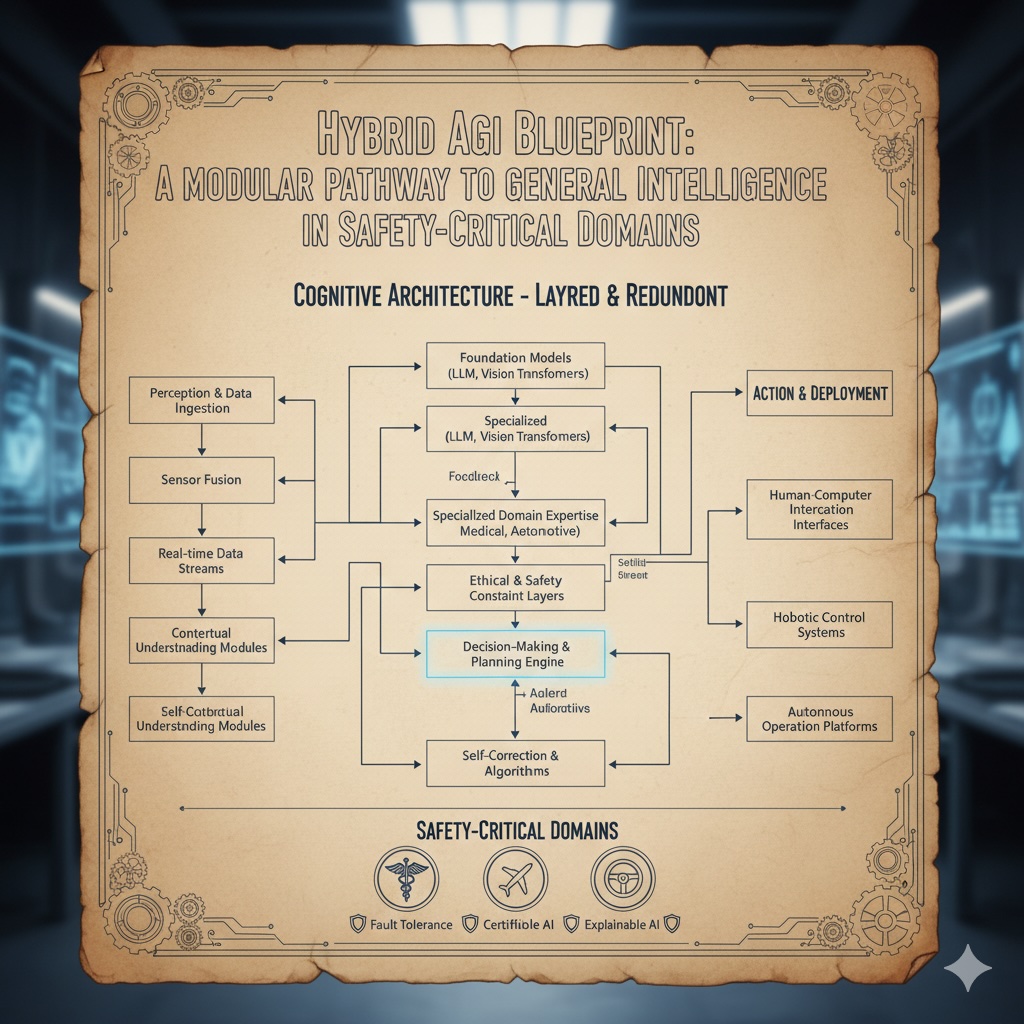

The Hybrid AGI Blueprint: A Modular Pathway to General Intelligence in Safety-Critical Domains

The Hybrid AGI Blueprint: A Modular Pathway to General Intelligence in Safety-Critical Domains Why Behavioural Science Is Replacing Traditional Marketing Strategy

Why Behavioural Science Is Replacing Traditional Marketing Strategy Building Smarter Health Systems: How Product Thinking is Transforming Healthcare

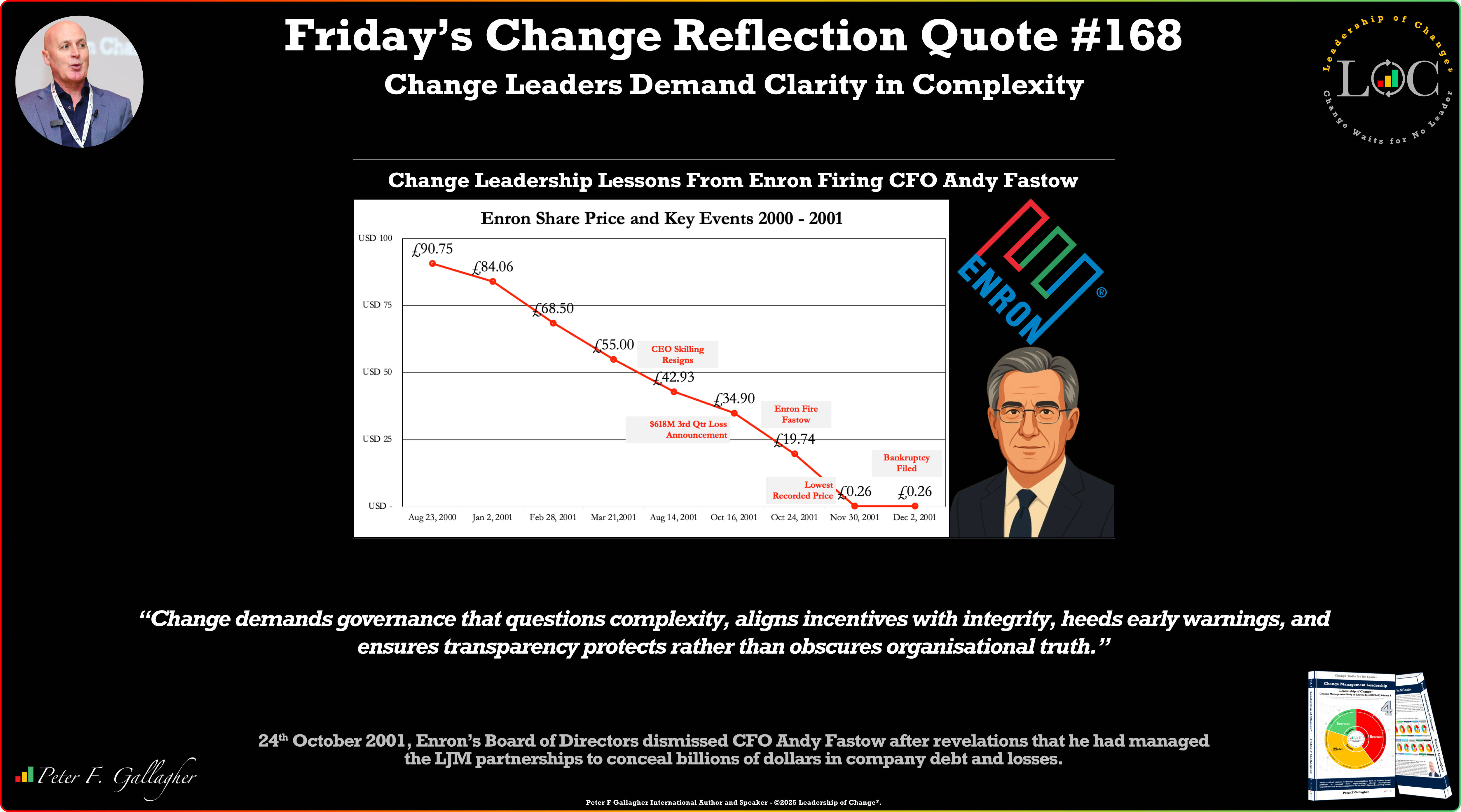

Building Smarter Health Systems: How Product Thinking is Transforming Healthcare Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Demand Clarity in Complexity

Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Demand Clarity in Complexity The Corix Partners Friday Reading List - October 24, 2025

The Corix Partners Friday Reading List - October 24, 2025